Criminal data is becoming more vast, complex, and fragmented. AI-powered investigative systems offer immense potential to accelerate intelligence analysis, but only if they meet AI compliance standards and emerging regulations designed to mitigate risks to fundamental rights and safety.

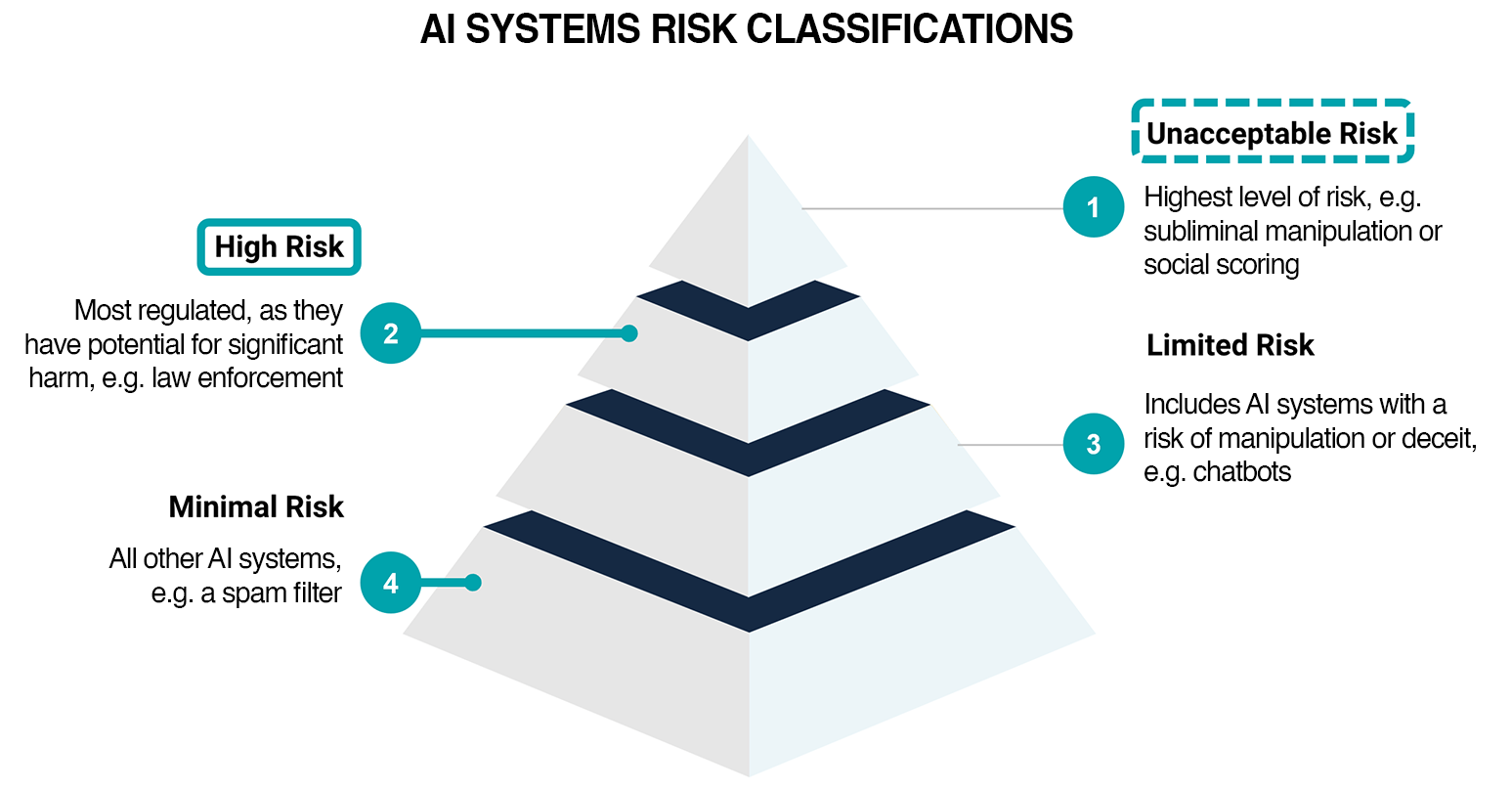

This blog outlines JSI’s approach to achieving and maintaining compliance with global AI regulatory frameworks, using the European Union’s Artificial Intelligence Act (EU AI Act) as a guiding standard. As the world’s first comprehensive AI law, the EU AI Act sets a precedent for AI governance, establishing requirements that are expected to influence AI regulatory developments worldwide.

In March 2024, the European Parliament, representing 27 EU Member States, approved the final draft of the EU AI Act. Following its formal approval by the Council of the EU in May 2024, the Act was published in the Official Journal of the European Union on July 12, 2024, and entered into force on August 1, 2024. Its rigorous classification of AI systems, particularly the ‘high-risk’ category, provides a robust framework for ensuring transparency, safety, and accountability, principles that are echoed in emerging AI regulations across other regions.

At JSI, we recognize that our customers in Law Enforcement and Intelligence rely on AI tools to solve critical, time-sensitive challenges. Given the high-stakes nature of these tools, JSI has committed to treating all current and future AI capabilities within our 4Sight platform as ‘high-risk systems’, as defined by the EU AI Act. This ensures we proactively align with the strictest global standards, delivering AI solutions that are secure, reliable, and compliant.

By leveraging the EU AI Act as our benchmark, this whitepaper highlights JSI’s strategy to meet and exceed the key requirements for building trustworthy AI systems—supporting our customers wherever they operate in an evolving regulatory landscape.

Definitions

To ensure clarity and consistency, the following definitions are drawn from the EU AI Act. While specific to the language of the EU AI Act, these definitions reflect key concepts that are increasingly adopted within emerging AI frameworks worldwide.

- AI System: “means a machine-based system designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.”

- High-Risk AI Systems: “AI systems referred to in Annex III shall also be considered high-risk” [Article 6, EU AI Act], including those “used by or on behalf of law enforcement authorities … for profiling of natural persons … in the course of detection, investigation, or prosecution of criminal offenses.”

- Provider: “means a natural or legal person, public authority, agency or other body that develops an AI system or a general-purpose AI model or that has an AI system or a general purpose AI model developed and places them on the market or puts the system into service under its own name or trademark, whether for payment or free of charge.”

- Deployer: “means any natural or legal person, public authority, agency or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity.”

- Profiling: “means any form of automated processing of personal data…” as defined in the case of law enforcement authorities in Article 4, point (4), of Regulation (EU) 2016/679 [Article 3, EU AI Act]

- Personal Data: “any information relating to an identified or identifiable natural person; an identifiable natural person is one who can be identified, directly or indirectly, in particular by reference to an identifier such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person.”

- Biometric Identification: “means the automated recognition of physical, physiological, behavioural, and psychological human features for the purpose of establishing an individual’s identity by comparing biometric data of that individual to stored biometric data of individuals in a database.”

AI Compliance in 4Sight

AI regulations set out specific requirements for high-risk AI systems to ensure they are safe, transparent, and accountable. These requirements establish the standards that govern the development, deployment, and ongoing monitoring of AI systems, focusing on areas like risk management, data governance, and system robustness. With the EU AI Act setting a standard for governing high-risk AI systems, JSI has built 4Sight’s compliance strategy to adhere to its requirements. This proactive alignment with the EU’s stringent regulations positions JSI to quickly adapt to and comply with emerging AI legislation worldwide as it evolves.

The following EU AI Act requirements and JSI’s corresponding compliance strategy outline how we ensure 4Sight meets these standards and remains a trusted, responsible platform for global use:

Requirement 1 | A risk management system shall be established, implemented, documented and maintained in relation to high-risk AI systems.

JSI has established a risk management process for each AI capability the company has brought and will bring to market. This includes ongoing evaluation of the AI capability throughout the development lifecycle to identify and document all potential risks, ensuring all aspects of the AI capability are considered throughout this process. The output of the risk management process is a document for each AI capability outlining all evaluation findings, which is regularly updated and made available to JSI customers for transparency, enabling appropriate product use.

Requirement 2 | High-risk AI systems which make use of techniques involving the training of models with data shall be developed on the basis of training, validation and testing data sets that meet the quality criteria referred to in paragraphs 2 to 5 whenever such datasets are used.

For all high-risk AI systems that employ model training techniques, JSI has implemented a robust data governance process to ensure that the training, validation, and testing datasets meet the quality criteria outlined in paragraphs 2 to 5 of Article 10 of the EU AI Act. This process ensures:

- High-Quality Data: All datasets are of high quality, well-labeled, and possess the appropriate statistical properties.

- Tailored for Use: Each dataset is specifically tailored to the intended use case.

- Bias Evaluation: Datasets are thoroughly evaluated for characteristics that may introduce bias or other shortcomings in the final model.

For activities where model training or fine-tuning is required, all evaluation results will be comprehensively documented and made available to relevant customers for full transparency.
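As a rough illustration of what one bias-evaluation check on a dataset might look like, the sketch below compares the share of positive labels across groups and flags large gaps. The record fields (`group`, `label`), the function names, and the 0.2 threshold are all hypothetical; they are assumptions made for this example and do not describe JSI's actual data governance process.

```python
from collections import Counter

def positive_rate_by_group(records, group_key="group", label_key="label"):
    """Return the share of positive (label == 1) records within each group."""
    totals = Counter()
    positives = Counter()
    for rec in records:
        group = rec[group_key]
        totals[group] += 1
        if rec[label_key] == 1:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def flag_imbalance(rates, max_gap=0.2):
    """Flag the dataset when the gap between the highest and lowest
    positive rate exceeds max_gap (a hypothetical threshold)."""
    if not rates:
        return False
    return max(rates.values()) - min(rates.values()) > max_gap

# Toy dataset, invented purely for illustration.
sample = [
    {"group": "A", "label": 1}, {"group": "A", "label": 0},
    {"group": "A", "label": 1}, {"group": "A", "label": 1},
    {"group": "B", "label": 0}, {"group": "B", "label": 0},
    {"group": "B", "label": 1}, {"group": "B", "label": 0},
]
rates = positive_rate_by_group(sample)
needs_review = flag_imbalance(rates)
```

A flagged dataset would not necessarily be rejected; the point of such a check is to surface a characteristic that should be documented and reviewed before training.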

Requirement 3 | The technical documentation of a high-risk AI system shall be drawn up before that system is placed on the market or put into service and shall be kept up-to-date. [It] shall be drawn up in such a way as to demonstrate that the high-risk AI system complies with the requirements set out in this Section and to provide national competent authorities and notified bodies with the necessary information in a clear and comprehensive form to assess the compliance of the AI system with those requirements.

As part of the feature release process, JSI makes technical documentation available for customers to ensure end users are well-versed in the purpose of the feature, how to use it appropriately, its levels of accuracy, its limitations, and how to interpret the output of an AI system. For complete compliance with this requirement, JSI also updates the technical documentation related to high-risk AI capabilities to ensure that all relevant elements of Annex IV are included and available for customers and national authorities to use as a reference when assessing compliance.

Requirement 4 | High-risk AI systems shall technically allow for the automatic recording of events (‘logs’) over the duration of the lifetime of the system.

To affirm compliance with this requirement, JSI will continue to validate that all usage logs of AI capabilities contain, at a minimum, details such as when the system was used, any reference databases that were checked, the input data used for processing, and which users verified the results. This logging is already standard practice within the 4Sight platform and will continue to be used by all AI capabilities developed by JSI.
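The minimum log contents listed above can be pictured as a structured record. The following is a minimal sketch, assuming a JSON-lines log format; the class name `AIUsageLogEntry`, its field names, and the helper functions are hypothetical and are not taken from the 4Sight platform.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
from typing import List

@dataclass
class AIUsageLogEntry:
    """One usage event for an AI capability (hypothetical schema)."""
    capability: str                 # which AI capability was invoked
    used_at: str                    # when the system was used (ISO 8601, UTC)
    reference_databases: List[str]  # reference databases that were checked
    input_data_ref: str             # pointer to the input data that was processed
    verified_by: List[str] = field(default_factory=list)  # users who verified the result

def new_log_entry(capability, reference_databases, input_data_ref, now=None):
    """Create an entry stamped with the current UTC time (or a supplied time)."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return AIUsageLogEntry(capability, timestamp, list(reference_databases), input_data_ref)

def record_verification(entry, user_id):
    """Record that a user verified the result, ignoring duplicate entries."""
    if user_id not in entry.verified_by:
        entry.verified_by.append(user_id)
    return entry

def to_json_line(entry):
    """Serialize the entry as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(entry), sort_keys=True)

entry = new_log_entry(
    capability="speaker-identification",
    reference_databases=["voiceprints-2024"],
    input_data_ref="case-1/audio-17",
    now=datetime(2024, 8, 1, 12, 0, tzinfo=timezone.utc),
)
record_verification(entry, "analyst_7")
line = to_json_line(entry)
```

Storing a reference to the input data rather than the data itself is a design choice made for this sketch; the source text only states that the input data used for processing is recorded.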

Requirement 5 | High-risk AI systems shall be designed and developed in such a way to ensure that their operation is sufficiently transparent to enable deployers to interpret the system’s output and use it appropriately. An appropriate type and degree of transparency shall be ensured with a view to achieving compliance with the relevant obligations of the provider.

Given the critical nature of JSI’s customer operations, all AI capabilities developed by JSI are optimized for use within an operational environment. This includes a strong emphasis on user experience, so that end users clearly understand how to use each capability and how to interpret its results, as well as built-in operational constraints where appropriate.

In addition (and as described in more detail above), JSI provides customers with clear documentation and 24/7 technical support. These inform users of the purpose of each AI capability, the accuracy metrics for supported input data sources, and any risks or biases identified through the Risk Management and Data Governance processes, so that negative consequences can be avoided. Dedicated training on AI capabilities is also provided to all applicable customers to ensure that end users make appropriate and effective use of the tools as intended.

Requirement 6 | High-risk AI systems shall be designed and developed in such a way, including with appropriate human-machine interface tools, that they can be effectively overseen by natural persons during the period in which the AI system is in use.

Including human oversight within provided AI capabilities is an extremely important requirement for JSI, given the high-risk nature of the types of data being analyzed by our customers. Two main methods are used to achieve this: disclaimers of capacities and limitations, and custom process fields for verification.

For all AI-generated or predicted results presented to end users, a strong and explicit disclaimer is included directly with these results to ensure that end users are fully aware that the content was produced by an AI model, not produced by a qualified natural person. As AI-produced results are made available to end users, additional customizable process fields are further made available so results can be used in a structured way as part of Standard Operating Procedures and/or human verification. While JSI will leave the specifics of this verification process to our customers (the “deployers”), guidance and best practices will still be provided as part of training and user documentation to help maximize the utility of provided AI capabilities.
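To make the two oversight mechanisms concrete, the sketch below attaches a disclaimer to every AI-produced result and carries deployer-defined process fields for human verification. The class `AIResult`, the disclaimer wording, and the field names are hypothetical, chosen only for illustration; the actual verification workflow is left to each deployer.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical wording; the real disclaimer text is not given in the source.
AI_DISCLAIMER = (
    "This content was produced by an AI model, "
    "not by a qualified natural person."
)

@dataclass
class AIResult:
    """An AI-produced result that always carries its disclaimer."""
    content: str
    disclaimer: str = AI_DISCLAIMER
    process_fields: Dict[str, str] = field(default_factory=dict)  # deployer-defined SOP fields
    verified_by: Optional[str] = None

    def verify(self, reviewer, **fields):
        """Record a human reviewer and any deployer-defined process fields."""
        if not reviewer:
            raise ValueError("a named reviewer is required")
        self.verified_by = reviewer
        self.process_fields.update(fields)

    def render(self):
        """Show the verification status, the content, and the disclaimer together."""
        status = f"Verified by {self.verified_by}" if self.verified_by else "UNVERIFIED"
        return f"[{status}] {self.content}\n{self.disclaimer}"

result = AIResult("Possible speaker match: subject 12")
before = result.render()
result.verify("analyst_7", sop_step="secondary review")
after = result.render()
```

In this sketch the disclaimer stays attached even after verification, so the AI origin of the content remains visible downstream.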

Requirement 7 | High-risk AI systems shall be designed and developed in such a way that they achieve an appropriate level of accuracy, robustness, and cybersecurity, and perform consistently in those respects throughout their lifecycle.

As part of the software development lifecycle associated with AI capabilities, JSI conducts a thorough evaluation of the selected AI models and benchmarks their levels of accuracy against custom datasets that are appropriately indicative of the real-world environment in which the AI capability will be deployed. Results of this evaluation are included within customer-facing documentation to make customers aware of situations in which the AI capability performs well (and, conversely, the situations where it does not).
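One simple way to report where a model performs well and where it does not is to break accuracy down by operating condition. The sketch below assumes a benchmark set of `(condition, input, expected)` tuples and a stand-in `toy_model`; both are invented for this example and are unrelated to any model JSI evaluates.

```python
from collections import defaultdict

def accuracy_by_condition(examples, predict):
    """Compute accuracy separately for each condition.

    examples: iterable of (condition, model_input, expected_output) tuples.
    predict:  callable mapping a model input to a predicted output.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for condition, model_input, expected in examples:
        total[condition] += 1
        if predict(model_input) == expected:
            correct[condition] += 1
    return {condition: correct[condition] / total[condition] for condition in total}

def toy_model(value):
    """Stand-in classifier used only to make the example runnable."""
    return "high" if value >= 10 else "low"

# Toy benchmark: the "noisy" condition contains a case the toy model gets wrong.
benchmark = [
    ("clean", 12, "high"),
    ("clean", 3, "low"),
    ("noisy", 11, "low"),
    ("noisy", 2, "low"),
]
report = accuracy_by_condition(benchmark, toy_model)
```

A per-condition breakdown like this maps naturally onto customer-facing documentation, since it states the situations in which results should be treated with more caution.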

Another aspect of the model evaluation process included within JSI’s current practices is the use of sophisticated, industry-leading security scanning tools to ensure that we are aware of and have a plan to mitigate security vulnerabilities, especially within any open-source projects being used. These tools are also applied to the 4Sight platform, providing a holistic understanding of the cybersecurity landscape across the platform.

From a robustness perspective, JSI considers a wide array of implicit requirements in all feature development, AI or otherwise. This includes non-functional requirements such as scalability, performance, usability, security, and availability. For deployments of AI capabilities, the 4Sight platform is resilient to unforeseen errors and faults within the system, so that mission-critical services continue to function where possible and mitigation plans are either triggered automatically or raised as alerts for administrators to handle in a timely manner.

Exempt AI Capabilities

Per Article 5 of the EU AI Act, certain AI practices are prohibited, including capabilities that rely heavily on biometric data, such as Speaker and Facial Identification; these capabilities are subject to specific regulatory restrictions. However, exemptions exist, particularly for use by law enforcement and intelligence agencies. These exemptions allow for the use of such capabilities in the following scenarios:

- Use by a Law Enforcement Agency with court approval

- Use in the search for victims of a crime

- Use in the prevention of imminent threats to life

- Use in the detection of a suspect of a serious criminal offense

- Use in the prevention of other serious risk of harm

It is important to note that the scope of these exemptions may vary depending on local regulations. In some jurisdictions, individual governments may choose to expand or narrow the scope of permissible use for these capabilities. JSI ensures that our customers, regardless of location, can remain compliant with applicable regulations when deploying these capabilities.

Wrap-Up

As a provider of high-risk AI systems, JSI is dedicated to ensuring that all AI solutions we offer comply with the regulations in each of our customers’ respective countries. By aligning our compliance strategy with the EU AI Act, JSI is confident in our ability to meet the requirements of emerging global compliance frameworks. Transparency remains a cornerstone of our approach, empowering our customers and their oversight bodies with the confidence that JSI’s AI capabilities are designed, maintained, and implemented to the highest standards of safety, accountability, and regulatory compliance.